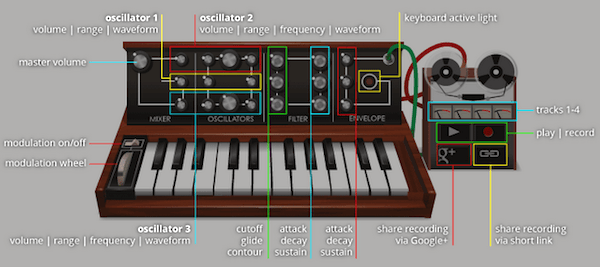

Yesterday we featured Google’s web-based analogue synthesiser, its tribute to Bob Moog, after spotting it on Google’s Japanese site several hours before it reached the rest of the world. We were so impressed with it that we decided to rip it apart to see how it works!

It celebrates what would have been the 78th birthday of Bob Moog, inventor of the Moog synthesiser (pronounced Mogue, to rhyme with vogue, so sadly ‘Moogle’ doesn’t quite work). Google are using the new, not-quite-a-standard-yet Web Audio API to create a fully functional synthesiser.

Because the Web Audio API is so new, this synthesiser uses webkitAudioContext in browsers that support it (at the time of writing, only Chrome) and falls back to Flash 10 or above everywhere else.
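The fallback boils down to a feature check: look for the `webkitAudioContext` constructor and, if it is missing, hand playback to Flash. Here is a minimal sketch of that decision, assuming a separate Flash plugin check has already produced a major version number. The `chooseAudioBackend` function and the `flashMajorVersion` field are hypothetical names for illustration, not taken from the doodle's code.

```typescript
// Possible outcomes of the check.
type AudioBackend = "webaudio" | "flash" | "none";

// Only the globals this check cares about; in a browser you would pass `window`.
interface AudioGlobals {
  webkitAudioContext?: unknown;
  flashMajorVersion?: number; // hypothetical: result of a separate Flash plugin check
}

function chooseAudioBackend(g: AudioGlobals): AudioBackend {
  // Prefer the (WebKit-prefixed) Web Audio API whenever its constructor exists.
  if (typeof g.webkitAudioContext === "function") {
    return "webaudio";
  }
  // Otherwise fall back to Flash, provided version 10 or later is installed.
  if (g.flashMajorVersion !== undefined && g.flashMajorVersion >= 10) {
    return "flash";
  }
  return "none";
}
```

Checking for the constructor itself, rather than sniffing for "Chrome" in the user agent, means any other browser that later ships the API would pick it up automatically.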

The Web Audio API is an extremely powerful tool for controlling audio in the browser. It is built around the concept of audio routing, a common tool in sound engineering: a simple but powerful way of representing the connections between a sound source and a destination (in this case, your speakers). Between these two end points you can connect any number of nodes, each of which takes the audio data passed in, manipulates it in some way and outputs it to whichever nodes are connected next in the chain.
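To make the routing idea concrete, here is a toy model of such a graph that runs outside the browser. It is not the Web Audio API: the `ToyNode`, `MapNode` and `RecordingDestination` classes are hypothetical stand-ins that push blocks of samples from a source, through a chain of nodes, to a destination that plays the role of the speakers.

```typescript
type Block = Float32Array;

// Base node: passes audio straight through unless a subclass overrides process().
class ToyNode {
  private outputs: ToyNode[] = [];

  // Wire this node's output into another node; returning the target lets
  // connections be chained: a.connect(b).connect(c).
  connect(next: ToyNode): ToyNode {
    this.outputs.push(next);
    return next;
  }

  // Transform one block of samples (identity by default).
  process(input: Block): Block {
    return input;
  }

  // Run this node on a block, then forward the result to every connected node.
  receive(input: Block): void {
    const output = this.process(input);
    for (const next of this.outputs) next.receive(output);
  }
}

// A node that applies a per-sample function, e.g. inverting the signal.
class MapNode extends ToyNode {
  private fn: (x: number) => number;

  constructor(fn: (x: number) => number) {
    super();
    this.fn = fn;
  }

  process(input: Block): Block {
    return input.map(this.fn);
  }
}

// Stands in for the speakers: records every block that reaches it.
class RecordingDestination extends ToyNode {
  received: Block[] = [];

  process(input: Block): Block {
    this.received.push(input);
    return input;
  }
}

// Usage: source -> inverter -> destination.
const source = new ToyNode();
const destination = new RecordingDestination();
source.connect(new MapNode((x) => -x)).connect(destination);
source.receive(new Float32Array([0.5, -0.25]));
// destination.received[0] now holds [-0.5, 0.25]
```

Because each node only knows which nodes come next, you can insert or remove processing steps by changing the connections alone, which is the property that makes routing graphs so convenient.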

All the common node types found in sound engineering are present. To control the volume, you create a gain node, set its value and place it between the source and the destination. Similarly, you can create filter nodes (specifically biquad filters) and connect these too. By chaining several of these nodes together, you get fine-grained control over the audio produced.
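In the browser, a GainNode and a BiquadFilterNode do this work for you. The sketch below spells out the arithmetic behind them so it can run anywhere: gain is a per-sample multiplication, and the low-pass biquad uses the well-known Audio EQ Cookbook coefficients in Direct Form I. This is an illustration under those assumptions; the browser's own filter formulas may differ in detail (for example, in how Q is interpreted), and the function names are hypothetical.

```typescript
// Gain: scale every sample by a constant factor.
function applyGain(input: Float32Array, gain: number): Float32Array {
  return input.map((x) => x * gain);
}

// Second-order (biquad) low-pass filter with Audio EQ Cookbook coefficients,
// processed sample by sample in Direct Form I.
function lowpassBiquad(
  input: Float32Array,
  sampleRate: number,
  cutoffHz: number,
  q: number,
): Float32Array {
  const w0 = (2 * Math.PI * cutoffHz) / sampleRate;
  const cosW0 = Math.cos(w0);
  const alpha = Math.sin(w0) / (2 * q);

  // Normalise every coefficient by a0 so the difference equation has a0 = 1.
  const a0 = 1 + alpha;
  const b0 = (1 - cosW0) / 2 / a0;
  const b1 = (1 - cosW0) / a0;
  const b2 = (1 - cosW0) / 2 / a0;
  const a1 = (-2 * cosW0) / a0;
  const a2 = (1 - alpha) / a0;

  const output = new Float32Array(input.length);
  let x1 = 0, x2 = 0, y1 = 0, y2 = 0; // previous two inputs and outputs
  for (let n = 0; n < input.length; n++) {
    const x0 = input[n];
    const y0 = b0 * x0 + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2;
    output[n] = y0;
    x2 = x1;
    x1 = x0;
    y2 = y1;
    y1 = y0;
  }
  return output;
}

// Usage: halve the volume, then low-pass at 1 kHz (Q = 1/sqrt(2)) at 44.1 kHz.
const dc = new Float32Array(4096).fill(1);
const shaped = lowpassBiquad(applyGain(dc, 0.5), 44100, 1000, Math.SQRT1_2);
// A constant (DC) signal passes the low-pass filter, so shaped settles near 0.5.
```

The order matters less here than it would with non-linear nodes: both stages are linear, so swapping them gives the same result, which is one reason gain and filter nodes can be rearranged so freely.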

In this Moog synthesiser, Google are primarily making use of another type of node specific to the Web Audio API: the JavaScriptAudioNode. This node can be used for audio generation as well as processing, so the synthesiser doesn’t rely on sampled audio files. Instead, it generates the original signal in real time and then passes it through the various filters.
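Generating audio in such a node means filling each output buffer the browser hands you with freshly computed samples, while keeping track of the waveform's phase so that consecutive buffers join up without clicks. Below is a minimal sketch of that pattern. It assumes a naive (not band-limited) sawtooth for simplicity, and the `SawOscillator` class is hypothetical; we don't know which waveform code the doodle actually uses.

```typescript
// Oscillator state kept between callbacks so the waveform continues smoothly
// from one buffer to the next.
class SawOscillator {
  private phase = 0; // position within the current cycle, in [0, 1)
  private frequency: number;
  private sampleRate: number;

  constructor(frequency: number, sampleRate: number) {
    this.frequency = frequency;
    this.sampleRate = sampleRate;
  }

  // Fill one output buffer, as an audio-processing callback would be asked to.
  fill(output: Float32Array): void {
    const step = this.frequency / this.sampleRate; // cycles advanced per sample
    for (let i = 0; i < output.length; i++) {
      output[i] = 2 * this.phase - 1; // naive sawtooth ramping from -1 towards +1
      this.phase += step;
      if (this.phase >= 1) this.phase -= 1; // wrap to start the next cycle
    }
  }
}
```

In a browser, the handler attached to the node's `onaudioprocess` event could pass `event.outputBuffer.getChannelData(0)` to `fill()`; everything after that (gain, filters) would be ordinary nodes further down the chain.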

If you dig into the large amount of JavaScript powering this doodle, you’ll find that most of it is actually dedicated to handling the UI. The Web Audio API abstracts away so much of the complicated audio processing underneath that, once all the knobs are wired up to the node settings, the code dedicated to creating the sound is actually quite small. Relatively. There’s also a nice little feature that encodes the audio and lets you share multi-track recordings, like this one we made earlier.

I’m sure Robert Moog would have had a great time playing with the API.

[UPDATE] We’ve just found even more info about this in the Google Doodle blog post.