Where’s that noise?

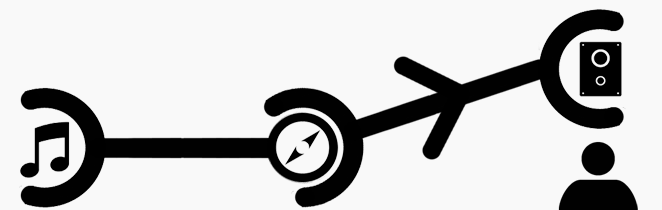

So far, all of our samples have been converted to mono (the second parameter in the context.createBuffer call). This means that there is no stereo effect at all – everything sounds as if the sound were generated between your ears. By converting the source to mono, we give ourselves more control over where we want it to come from.

The Web Audio API provides tools that let you position both the listener and the sound sources within a 3D environment. Because the API does this for you, you (the coder) don’t need to worry about relative locations, distance vectors or anything like that.

The Listener

As there is only one final destination for the sound, there is only one ‘Listener’ at that destination. You can position the listener by specifying an x, y, z coordinate.

    /* Create sound source as before... ... */
    soundSource.connect(context.destination);
    context.listener.setPosition(20, -5, 0);

Note that the listener is not a node. The single listener within the 3D environment is a property of the AudioContext (context.listener). The code above positions the listener 20 units along the positive X direction and 5 units along the negative Y direction, and leaves Z unchanged. By default, predictably, the listener is positioned at (0, 0, 0).

From a more practical point of view, picture a 3D game environment. By updating the position of the AudioContext listener every time your player character moves, you pass the tricky business of handling the loudness and directionality of various environmental noises over to the Web Audio API.
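As a rough sketch of that idea, the helper below copies a player's coordinates onto the listener, and could be called once per frame. The `syncListener` name and the shape of the `player` object are made up for illustration. Newer browsers expose `positionX`, `positionY` and `positionZ` parameters on the listener and treat `setPosition` as deprecated, so the sketch prefers those when they are present and falls back to `setPosition` otherwise.

```javascript
// Hypothetical helper: keep the listener where the player is.
// `context` is an AudioContext; `player` is any object with numeric x, y, z.
function syncListener(context, player) {
  var listener = context.listener;
  if (listener.positionX) {
    // Newer API: each coordinate is an AudioParam with a .value
    listener.positionX.value = player.x;
    listener.positionY.value = player.y;
    listener.positionZ.value = player.z;
  } else {
    // Older API, as used elsewhere in this article
    listener.setPosition(player.x, player.y, player.z);
  }
}
```

In a game loop this might be called as `syncListener(context, player)` right after the player's position has been updated.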

Panner Node

This is the other half of the positional audio control. The PannerNode allows you to specify the apparent location of the sound source. You could use this to specify the location of a moving non-player character. All the directionality and distance fall-off calculation will be handled by the API so you don’t need to worry about it.

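    // Create a panner and place the sound source at (10, 5, 0)
    var panner = context.createPanner();
    panner.setPosition(10, 5, 0);

    // Route the sound through the panner on its way to the destination
    soundSource.connect(panner);
    panner.connect(context.destination);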
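To give a feel for what the API takes off your hands, here is a sketch of the distance fall-off for the `inverse` distance model, which is the PannerNode default according to the Web Audio specification. It assumes the default `refDistance` and `rolloffFactor` of 1, and the function names are invented for this example. You never need to call anything like this yourself, and it covers only loudness, not direction.

```javascript
// Straight-line distance between two {x, y, z} points.
function distanceBetween(a, b) {
  var dx = a.x - b.x;
  var dy = a.y - b.y;
  var dz = a.z - b.z;
  return Math.sqrt(dx * dx + dy * dy + dz * dz);
}

// Gain for the "inverse" distance model.
// refDistance and rolloffFactor default to 1, as assumed above.
function inverseDistanceGain(distance, refDistance, rolloffFactor) {
  if (refDistance === undefined) { refDistance = 1; }
  if (rolloffFactor === undefined) { rolloffFactor = 1; }
  // Sounds closer than refDistance are not made any louder
  var d = Math.max(distance, refDistance);
  return refDistance / (refDistance + rolloffFactor * (d - refDistance));
}

// Listener at (20, -5, 0) and source at (10, 5, 0), as in the snippets above
var listenerPos = { x: 20, y: -5, z: 0 };
var sourcePos = { x: 10, y: 5, z: 0 };
var gain = inverseDistanceGain(distanceBetween(listenerPos, sourcePos));
```

With those two positions the source is about 14 units from the listener, so the sound is scaled to roughly 0.07 of its original level.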

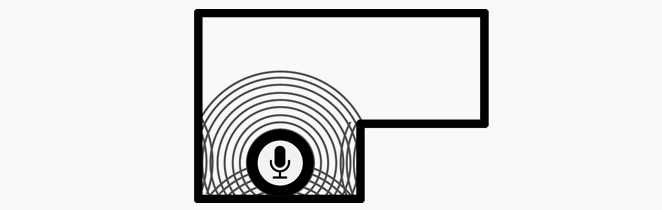

What’s an Impulse Response?

I’m glad you asked. If you were to follow the Wikipedia definition, you might get a bit confused. One way of looking at it is that an impulse response measures how a particular system reacts when faced with a measurable external force. A much simpler way to think of one is as “An Acoustic Photograph”. An impulse response contains information about the acoustic properties of a room, concert hall, forest, wherever. The impulse response of a tiled bathroom is a wave that contains information about each little echo coming from the tiles or the way the towel rack absorbs sounds. The impulse response of a forest, however, will contain very little echo, maybe only a subtle one coming from the leaves high above.

It’s important to remember that the impulse response doesn’t contain any noise from the location in which it was created. There are no creaking trees in the forest wave; it simply takes a snapshot of the way the acoustics work there.

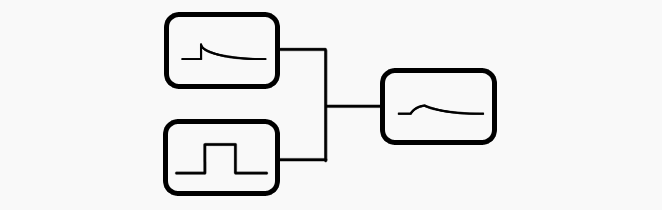

Convolution

Yet more clever mathematics that we will gloss over and simplify greatly. Convolution of two waves simply “smooshes” them together. This is different from adding the waves together, which would produce a single output sounding kind of like both played at the same time. Convolution instead takes properties of the two waves and combines them to make the output wave. In essence, it can take the acoustic properties of an impulse response and “smoosh” them onto a different audio signal, recreating the original sound but with the echoes, dampening and everything else of the measured location.

Start with a recording of a ticking clock without any echo. Convolve that recording with an impulse response recorded in an echoey concert hall. The output now sounds like a ticking clock in the middle of an echoey concert hall.

Take the same original noise and convolve it with the impulse response of a broom closet. You now have a ticking clock sounding as though it was recorded in a broom closet.

As always, check out Wikipedia for more detailed information on convolution.
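For the curious, the “smooshing” can be sketched in a few lines on plain arrays of samples. This is a naive, direct-form discrete convolution written for illustration only; the `convolve` function and the tiny made-up sample arrays are not part of the Web Audio API, and a real implementation will be far better optimised.

```javascript
// Naive discrete convolution: every input sample triggers a scaled
// copy of the impulse response, and the overlapping copies are summed.
function convolve(signal, impulse) {
  var out = [];
  var length = signal.length + impulse.length - 1;
  for (var n = 0; n < length; n++) { out.push(0); }
  for (var i = 0; i < signal.length; i++) {
    for (var j = 0; j < impulse.length; j++) {
      out[i + j] += signal[i] * impulse[j];
    }
  }
  return out;
}

// A two-sample "tick" and a toy room with one echo at half volume,
// arriving two samples after the direct sound
var dry = [1, 0.5];
var room = [1, 0, 0.5];
var wet = convolve(dry, room); // [1, 0.5, 0.5, 0.25]
```

The first two output samples are the original tick, and the last two are the same tick again at half volume: the echo described by the toy impulse response.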

Enter the Convolver

There is a good reason we’ve gone over the two previous topics. This is yet another thing you can do with the Web Audio API. Neat.

As with our original sound source, we can load an impulse response via XHR and then hand it to a convolver, which is just another node in the graph, to apply that response to our audio data.

    /* Create sound source as before... ... */

    // Again, the context handles the difficult bits
    var convolver = context.createConvolver();

    // Wiring
    soundSource.connect(convolver);
    convolver.connect(context.destination);

    // Load the impulse response asynchronously
    var request = new XMLHttpRequest();
    request.open("GET", "impulse-response.mp3", true);
    request.responseType = "arraybuffer";
    request.onload = function () {
      context.decodeAudioData(request.response, function (buffer) {
        // Use the decoded audio as the convolver's impulse response
        convolver.buffer = buffer;
        playSound();
      });
    };
    request.send();

Three people singing in a Church

Three people singing in a dustbin

Summary

We now have sounds that we can move around a 3D environment and the ability to make that 3D environment sound like any shape we like. It’s only taken us a couple dozen lines of code and we already have an immersive, interactive audio environment. Not too bad.
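Putting the two halves together might look something like the sketch below, which routes a source through a panner and then a convolver. The `buildScene` name, its parameters and the choice to pan before convolving are assumptions made for this example rather than anything the API prescribes; `impulseBuffer` is assumed to be an impulse response that has already been decoded.

```javascript
// Hypothetical helper: position a sound, then place it in a "room".
// position is an {x, y, z} object; impulseBuffer is a decoded AudioBuffer.
function buildScene(context, soundSource, impulseBuffer, position) {
  // Where the sound appears to come from
  var panner = context.createPanner();
  panner.setPosition(position.x, position.y, position.z);

  // What the surrounding space sounds like
  var convolver = context.createConvolver();
  convolver.buffer = impulseBuffer;

  // source -> panner -> convolver -> speakers
  soundSource.connect(panner);
  panner.connect(convolver);
  convolver.connect(context.destination);

  // Hand the nodes back so the caller can move the sound later
  return { panner: panner, convolver: convolver };
}
```

Keeping hold of the returned `panner` means a moving character can be followed with further `setPosition` calls without rebuilding the graph.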

The next steps will involve creating our own audio data from scratch rather than relying on prerecorded samples.

Attributions

- Impulse responses from Fokke van Saane

- “Hello, Hello, Hello” from freesound.org

- “Speaker” symbol by Okan Benn, from thenounproject.com.