The Web Audio API is one of two new audio APIs – the other being the Audio Data API – designed to make creating, processing and controlling audio within web applications much simpler. The two APIs aren’t exactly competing: the Audio Data API allows more low-level access to audio data, although there is some overlap.

At the moment, the Web Audio API is a WebKit-only technology while the Audio Data API is a Mozilla thing. However, it was recently announced that iOS 6 will have Web Audio API support, so mobile support is on the way.

On this page, we will start at the very beginning and work through the basic concepts until we have a working example.

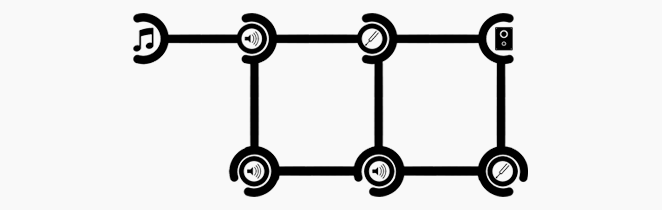

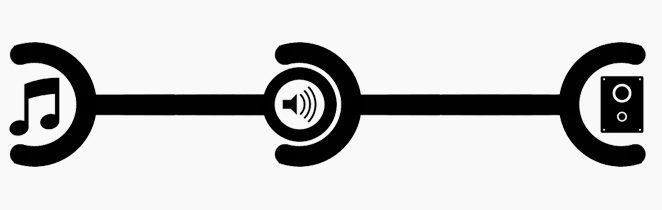

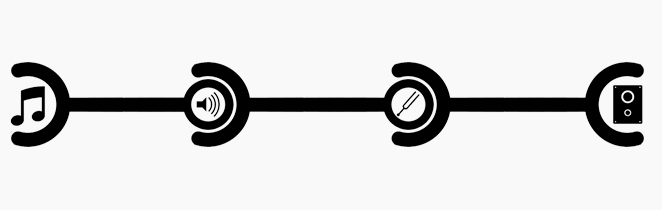

Audio routing graphs

The Web Audio API is an extremely powerful tool for controlling audio in the browser. It is based around the concept of audio routing graphs, a common tool in sound engineering. This is a simple but powerful way of representing the connections between a sound source and a destination (in this case, your speakers). Between these two end points, you can connect any number of nodes, each of which takes the audio data passed in, manipulates it in some way and outputs it to whichever nodes are connected next in the chain.

There can be only one!

AudioContext, that is. Unlike canvases and canvas contexts, a page should normally create only one AudioContext (browsers may permit more, but a single context is the usual recommendation). This doesn’t prove to be a limitation, as you can easily create multiple, completely separate audio graphs within the context. Essentially, the context object acts as a holder for the API calls and provides the abstraction required to keep the process simple.

Even though this is only supported in WebKit at the moment, this snippet will ensure we’re prepared for future developments.

    var context;

    if (typeof AudioContext !== "undefined") {
      context = new AudioContext();
    } else if (typeof webkitAudioContext !== "undefined") {
      context = new webkitAudioContext();
    } else {
      throw new Error('AudioContext not supported. :(');
    }
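The same check can be wrapped in a small function so the rest of the page does not care which constructor name the browser exposes. This is only a minimal sketch: `createAudioContext` and its `root` parameter are names made up for illustration, not part of the API.

```javascript
// Hypothetical helper: returns a new audio context from whichever
// constructor the given global object exposes (pass `window` in a browser).
function createAudioContext(root) {
  var Ctor = root.AudioContext || root.webkitAudioContext;
  if (typeof Ctor !== "function") {
    throw new Error("AudioContext not supported. :(");
  }
  return new Ctor();
}

// Usage in a page: var context = createAudioContext(window);
```

Passing the global object in, rather than reading `window` directly, keeps the function easy to try out with a stand-in object.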

Create a sound source

Unlike working with audio elements, you can’t simply set the source and have it load. Most often, you will load the audio file with an XMLHttpRequest and an asynchronous callback.

    var request = new XMLHttpRequest();
    request.open("GET", audioFileUrl, true);
    request.responseType = "arraybuffer";

    // Our asynchronous callback
    request.onload = function() {
      var audioData = request.response;
      createSoundSource(audioData);
    };

    request.send();
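The request above assumes the download always succeeds. A slightly more defensive version might look like the sketch below; `loadAudioData`, `onLoaded` and `onFailed` are hypothetical names, and it assumes the file is served over HTTP so that a 2xx status means success.

```javascript
// Hypothetical helper: downloads a file as an ArrayBuffer and reports
// success or failure through callbacks.
function loadAudioData(url, onLoaded, onFailed) {
  var request = new XMLHttpRequest();
  request.open("GET", url, true);
  request.responseType = "arraybuffer";

  request.onload = function () {
    // onload also fires for HTTP errors such as 404, so check the status.
    if (request.status >= 200 && request.status < 300) {
      onLoaded(request.response);
    } else {
      onFailed(new Error("HTTP status " + request.status));
    }
  };

  // onerror fires for network-level failures.
  request.onerror = function () {
    onFailed(new Error("Network error while loading " + url));
  };

  request.send();
}
```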

The AudioContext provides a method, decodeAudioData, that turns the downloaded binary data into an audio buffer. Decoding is asynchronous, so the buffer arrives in a callback. Use the received audioData to create the full sound source.

    // Create a sound source
    soundSource = context.createBufferSource();

    // The Audio Context handles creating source
    // buffers from raw binary data
    context.decodeAudioData(audioData, function(soundBuffer){
      // Add the buffered data to our object
      soundSource.buffer = soundBuffer;
    });
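Because decoding is asynchronous, anything that needs the buffer has to wait for the callback. One way to make that explicit is sketched below; `decodeToSource`, `onReady` and `onFailed` are hypothetical names, while the third argument to `decodeAudioData` is its standard error callback.

```javascript
// Hypothetical helper: decodes raw audio bytes and hands back a
// buffer source that is ready to be connected and started.
function decodeToSource(context, audioData, onReady, onFailed) {
  context.decodeAudioData(
    audioData,
    function (soundBuffer) {
      var source = context.createBufferSource();
      source.buffer = soundBuffer;
      onReady(source);
    },
    function (error) {
      // Called when the data cannot be decoded (e.g. unsupported format).
      onFailed(error);
    }
  );
}
```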

Connect the source to the destination

This is where we start to create our Audio Routing Graphs. We have our sound source and the AudioContext has its destination which, in most cases, will be your speakers or headphones. We now want to connect one to the other. This is essentially nothing more than taking the cable from the electric guitar and plugging it into the amp. The code to do this is even simpler.

    soundSource.connect(context.destination);

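That’s it for the wiring. Assuming you’re using the same variable names as above, the only thing left is to start playback once the buffer has been decoded by calling soundSource.start(0) (older WebKit builds named this method noteOn), and suddenly your sound source is coming out of the computer. Neat.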
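Connecting a buffer source does not by itself produce sound: the source also has to be started, and a buffer source can only be started once. A minimal sketch of a "play this buffer" function follows; `playBuffer` is a hypothetical name, and it assumes a browser that exposes `start` (older WebKit builds called it `noteOn`).

```javascript
// Hypothetical helper: plays a decoded buffer straight through the speakers.
// A fresh source node is created for every playback because a buffer
// source cannot be restarted once it has been started.
function playBuffer(context, soundBuffer) {
  var source = context.createBufferSource();
  source.buffer = soundBuffer;
  source.connect(context.destination);
  source.start(0); // 0 means "start immediately"
  return source;
}
```

Returning the source lets the caller stop it or reroute it later.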

Create a node

Of course, if it were simply connecting a sound to a speaker, we wouldn’t have any control over it at all. Along the way between start and end, we can create and insert ‘nodes’ into the chain. There are many different kinds of nodes. Each node either creates or receives an audio signal, processes the data in some way and outputs the new signal. The most basic is a GainNode, used for volume.

    // Create a volume (gain) node
    volumeNode = context.createGain();

    // Set the volume
    volumeNode.gain.value = 0.1;
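The gain value is a linear multiplier applied to the signal, whereas volume in sound engineering is usually quoted in decibels. The standard conversion is gain = 10^(dB / 20). The sketch below shows it; `dbToGain` is a hypothetical helper name, not something the API provides.

```javascript
// Hypothetical helper: converts a level in decibels to the linear
// multiplier expected by gain.value (0 dB leaves the signal unchanged).
function dbToGain(decibels) {
  return Math.pow(10, decibels / 20);
}

// Usage in a page: volumeNode.gain.value = dbToGain(-20); // roughly 0.1
```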

Chain everything together

We can now put our Gain in the chain by connecting the sound source to the Gain then connecting the Gain to the destination.

    soundSource.connect(volumeNode);
    volumeNode.connect(context.destination);
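As chains get longer, writing one connect call per link becomes repetitive. A small loop can do the wiring instead; this is a sketch only, and `connectChain` is a hypothetical helper name.

```javascript
// Hypothetical helper: connects each node in the array to the one after it.
function connectChain(nodes) {
  for (var i = 0; i < nodes.length - 1; i++) {
    nodes[i].connect(nodes[i + 1]);
  }
}

// Usage in a page:
// connectChain([soundSource, volumeNode, context.destination]);
```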

Lengthy chains

Another common type of node is the BiquadFilter. This is a common feature of sound engineering which, through some very impressive mathematical cleverness, provides a lot of control over the audio signal by exposing only a few variables.

This is not necessarily the best place to go into detail but here’s a quick summary of the available filters. Each of them takes a frequency value and they can optionally take a Q factor or a gain value, depending on the type of filter.

Lowpass

Sounds below the supplied frequency are let through, sounds above are quietened. The higher, the quieter.

Highpass

Sounds above the supplied frequency are let through, sounds below are quietened. The lower, the quieter.

Bandpass

Sounds immediately above and below the supplied frequency are let through. Sounds higher and lower than a certain range (specified by the Q factor) are quieter.

Lowshelf

All sounds are let through; those below the given frequency are made louder (or quieter, if the gain value is negative).

Highshelf

All sounds are let through; those above the given frequency are made louder (or quieter, if the gain value is negative).

Peaking

All sounds are let through; those in a range around the given frequency are made louder (or quieter, if the gain value is negative). The Q factor controls how wide that range is.

Notch

Opposite of Bandpass. Sounds immediately above and below the supplied frequency are made quieter. Sounds outside a certain range (specified by the Q factor) are let through unchanged.

Allpass

Changes the phase between different frequencies. If you don’t know what it is, you probably don’t need it.

Connecting these filter nodes is as simple as any other.

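    filterNode = context.createBiquadFilter();

    // Specify this is a lowpass filter
    // (older WebKit builds used the numeric constant 0 here)
    filterNode.type = "lowpass";

    // Quieten sounds over 220Hz
    filterNode.frequency.value = 220;

    // Drop any earlier direct route from the gain to the speakers
    volumeNode.disconnect();

    soundSource.connect(volumeNode);
    volumeNode.connect(filterNode);
    filterNode.connect(context.destination);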
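The optional Q factor and gain mentioned in the summary are set the same way as the frequency, through each parameter’s value property. The sketch below gathers that into one function; `createFilter` and the shape of its `settings` object are assumptions made for illustration, and the string type names assume a browser that follows the current specification rather than the older numeric constants.

```javascript
// Hypothetical helper: builds a biquad filter from a plain settings object.
function createFilter(context, settings) {
  var filter = context.createBiquadFilter();
  filter.type = settings.type;                 // e.g. "lowpass", "peaking"
  filter.frequency.value = settings.frequency; // in Hz
  if (settings.Q !== undefined) {
    filter.Q.value = settings.Q;
  }
  if (settings.gain !== undefined) {
    filter.gain.value = settings.gain;         // in dB
  }
  return filter;
}

// Usage in a page:
// var boost = createFilter(context, { type: "peaking", frequency: 1000, Q: 2, gain: 6 });
```

Settings that a given filter type ignores (for example gain on a lowpass filter) can simply be left out.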

Done

By now, you should have a working sample of the Web Audio API in front of you. Nice job.

We have, however, only scratched the surface of the API. We’ll go into that more soon.

Attributions

- “Hello, Hello, Hello” sample from freesound.org

- “Speaker” symbol by Okan Benn, from thenounproject.com.